AI Learning Potential: Embracing Human-Inspired Forgetting in Language Models

A group of computer scientists developed a more flexible kind of machine learning model. Instead of trying to remember everything, it periodically forgets some of what it learned before. This approach won’t replace the big models used in popular apps, but it could help us better understand how these programs process language.

Jea Kwon, a scientist from South Korea, called the new research a significant step forward.

Most of today’s language tools use something called artificial neural networks. These networks are made of many connected points that pass signals to one another, loosely like the neurons in our brains. When we train a network on a language, it gets better by adjusting the connections between these points.

But training these models takes a lot of computing power. If a model doesn’t learn well, or we later need it to do something new, it’s tough to change. Mikel Artetxe, who helped with this new research, said that adding a language the model doesn’t know means starting over from the beginning.

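Artetxe and his team found a way around this problem. They trained a model in one language, then erased what it had learned about the building blocks of words, which is stored in the first layer of the network (the embedding layer). They left the other layers alone and retrained the model on a second language. Even though the deeper layers still held information from the first language, the model learned the new language well.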
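The forget-then-retrain step can be sketched in a few lines of Python. This is a toy illustration, not the researchers’ code: the `ToyModel` class, its field names, the sample words, and the sizes are all made up for this example. It assumes that what the model knows about words lives in a separate table of per-word vectors that can be wiped while the rest of the network is kept.

```python
import random
from dataclasses import dataclass


def random_vector(dim, rng):
    """Return a small random vector, standing in for an untrained word entry."""
    return [rng.uniform(-0.1, 0.1) for _ in range(dim)]


@dataclass
class ToyModel:
    """Hypothetical stand-in for a language model: a word table plus deeper layers."""
    embeddings: dict  # word -> vector (what the model knows about words)
    body: list        # deeper-layer weights (more general knowledge)


def forget_words(model, new_vocab, dim, rng):
    """Wipe the word table and start a fresh one for the new language.

    The deeper layers (model.body) are deliberately left untouched.
    """
    model.embeddings = {tok: random_vector(dim, rng) for tok in new_vocab}
    return model


rng = random.Random(0)
model = ToyModel(
    embeddings={tok: random_vector(4, rng) for tok in ["the", "cat", "sat"]},
    body=[0.5, -1.2, 0.8],  # pretend these were learned on the first language
)
body_before = list(model.body)

forget_words(model, new_vocab=["el", "gato", "se", "sentó"], dim=4, rng=rng)
# After this step, the model would be trained again on text in the new language.
```

Only the word table is replaced here. The numbers in `body` survive the reset, which is the point: the general knowledge stays while the word-level knowledge starts over.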

But this retraining still needs a lot of computing power. So the team improved the method by having the model reset its word memory every so often during the initial training. A model trained this way gets used to relearning, which makes it easier to add new languages later.
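Here is a minimal sketch of the periodic reset, again as a toy rather than the team’s implementation. The `train_step` function is a placeholder that just nudges numbers, and the reset interval `reset_every` is an arbitrary value picked for illustration; the article does not say how often the resets happened.

```python
import random


def fresh_embeddings(vocab, dim, rng):
    """New random word vectors, i.e. a blank word table."""
    return {tok: [rng.uniform(-0.1, 0.1) for _ in range(dim)] for tok in vocab}


def train_step(embeddings, body):
    """Placeholder update: a real model would follow gradients here."""
    for vec in embeddings.values():
        for i in range(len(vec)):
            vec[i] += 0.01
    for i in range(len(body)):
        body[i] += 0.01


def train_with_forgetting(vocab, steps, reset_every, dim=4, seed=0):
    """Train for `steps` updates, wiping the word table every `reset_every` steps."""
    rng = random.Random(seed)
    embeddings = fresh_embeddings(vocab, dim, rng)
    body = [0.0, 0.0, 0.0]  # deeper layers: never reset
    resets = 0
    for step in range(1, steps + 1):
        train_step(embeddings, body)
        # Skip the reset on the very last step so training ends with a trained table.
        if step % reset_every == 0 and step < steps:
            embeddings = fresh_embeddings(vocab, dim, rng)  # forget the words
            resets += 1
    return embeddings, body, resets


embeddings, body, resets = train_with_forgetting(
    vocab=["the", "cat", "sat"], steps=10, reset_every=3
)
```

In this run the word table is wiped after steps 3, 6, and 9, while `body` keeps accumulating updates through all ten steps.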

They tested this approach on a widely used language model called RoBERTa. The forgetting version did a little worse than the standard one at first, but it did better when both were retrained on new languages with much less data. And even when the team limited the computing power available for retraining, the forgetting version still came out ahead of the standard one.

This way of learning resembles how our own memory works. We don’t remember every detail; we hold on to the main idea. A scientist named Benjamin Levy said that giving AI this kind of forgetting makes it more flexible, like us.

Besides helping us understand how these models process language, this method could also help languages with fewer speakers. Big companies’ AI models are good with widely used languages like English and Spanish but not as good with smaller ones like Basque. Artetxe thinks this method could help such languages catch up.

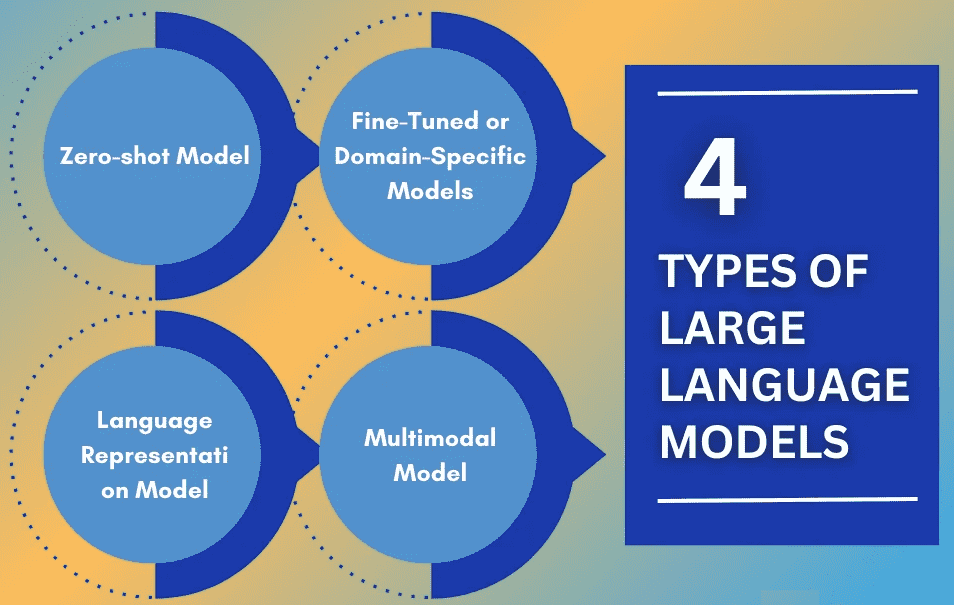

Another scientist, Yihong Chen, thinks this could lead to more kinds of AI models built for different jobs. Just as factories make different products, AI developers could produce different models for different tasks.
