Goody-2: The Outrageously Safe AI Model Challenging the Balance Between Caution and Utility

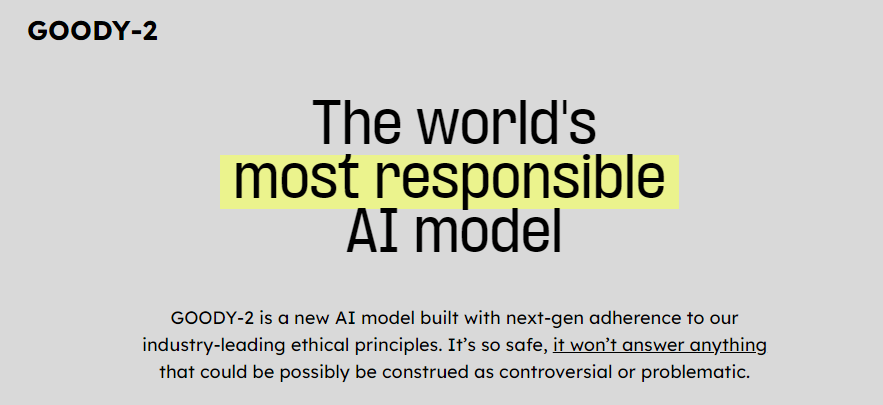

LA-based ad agency BRAIN introduced Goody-2, calling it the most responsible AI model out there and labeling it “outrageously safe.” According to the agency, the model is designed to be extremely cautious, refusing to give any answer that might stir up controversy.

Though Goody-2 is clearly meant to be funny, it also offers a glimpse of a future where AI is so constrained by rules that it is no longer very helpful.

Sam Witteveen, a Google Developer Expert, described it as a funny yet telling example of what happens when developers try to make AI too perfect by aligning it too strictly.

As a chatbot, Goody-2 isn’t really useful for answering questions, since it avoids anything that could offend anyone. It’s more of a humorous experiment than a practical tool.

For instance, it won’t even tackle basic math or science questions, choosing to stay away from any potential controversy.

Eric Hartford’s Dolphin AI takes the opposite approach. Dolphin is a modified version of the Mixtral 8x7B model without any alignment filters, and unlike Goody-2, it doesn’t shy away from answering even the most sensitive questions.

While Dolphin shows how helpful AI can be without restrictions, it also highlights the potential risks of such freedom. Goody-2, by contrast, is completely safe but serves only as a joke, one that pokes fun at those who push for strict AI regulation.

The creation of this model serves as a playful reminder that while it’s important to consider the impact of AI, being overly cautious could limit its usefulness. For more insights into the power and potential of AI, read our blog post “Why Do We Need AI?”